Understanding and generating audio-visual multimedia content is a key technology for the "metaverse." We are interested in virtual human generation techniques, and our goal is to generate concert performance animation videos from only one picture and one song, without sophisticated 3D scanning devices, multi-view stereo algorithms, or wearable motion capture devices. Such techniques will bring important progress to the 3D animation industry, the film industry, and even the medical industry. We focus on two directions in particular: 3D body movement generation, and 3D human pose and shape estimation. In the first direction, given the music audio or score information, the virtual human is trained to understand how to play the music and to generate the corresponding body movement. In the second direction, a digitized 3D human body is reconstructed from a simple 2D picture or video. In this talk, we will discuss these techniques and present our latest results.

Activity Information

Speaker

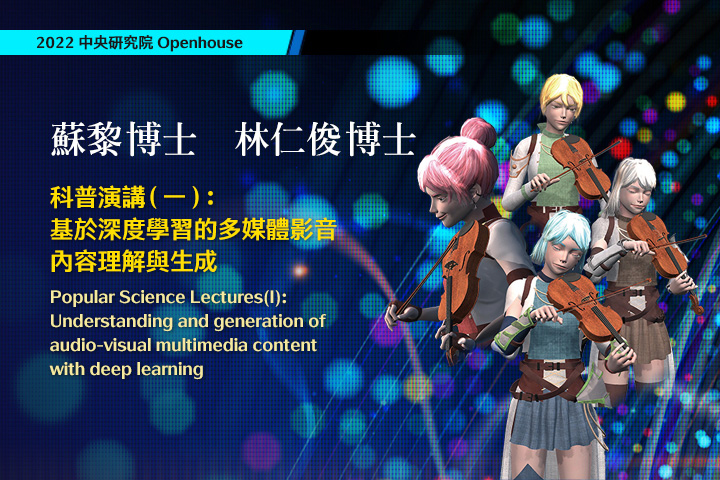

Dr. Li Su, Dr. Jen-Chun Lin

Time

10/29 10:00 - 10/29 10:40

Online

Organizer

Institute of Information Science

Activity Classification

Division

Division of Mathematics and Physical Sciences

Category

Lectures & Symposiums

Other Information

Target Audience

Age 15 and above

Contact: Maggie Chen, 02-27883799 ext. 2203